11. Backpropagation Quiz

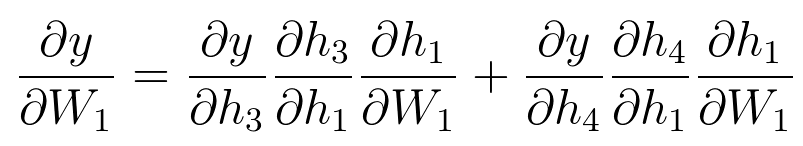

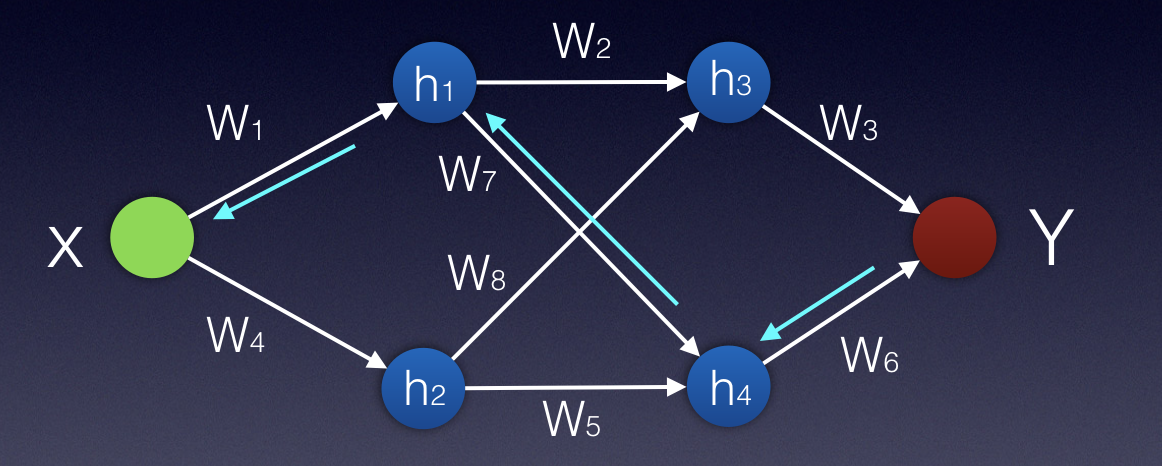

The following picture shows a feedforward network with:

- A single input x

- Two hidden layers with two neurons in each layer

- A single output

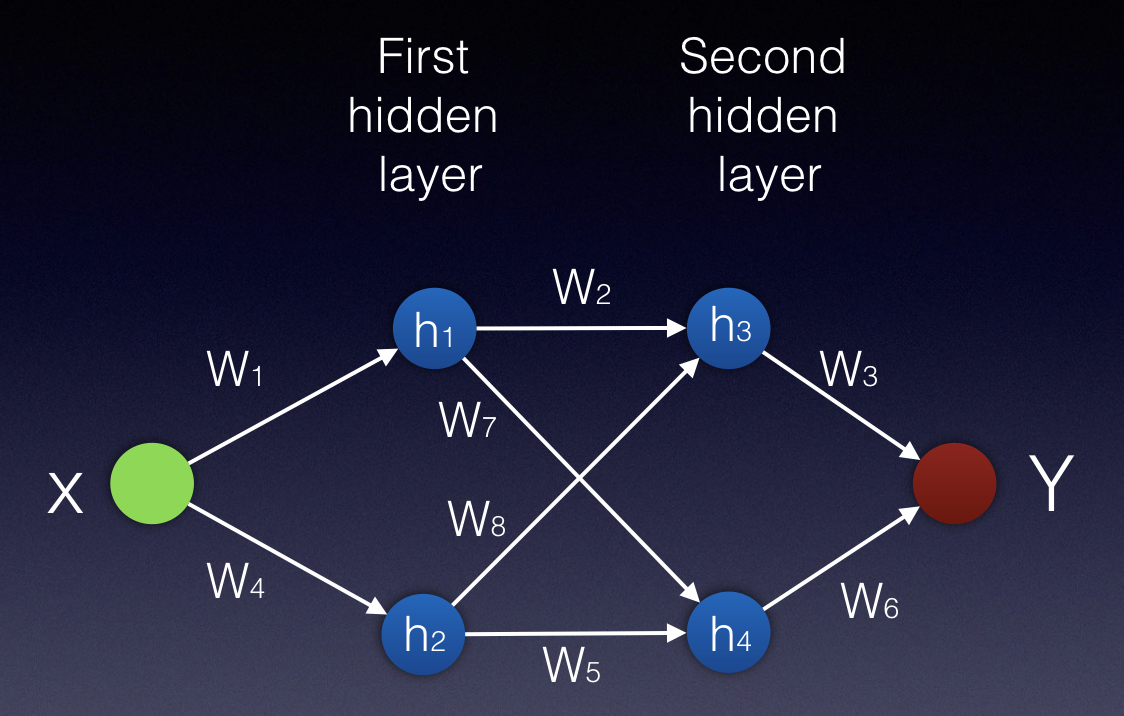

SOLUTION:

Equation A

Solution

W_1 contributes to the output through two separate paths:

- Path A

- Path B

(both displayed in the pictures below)

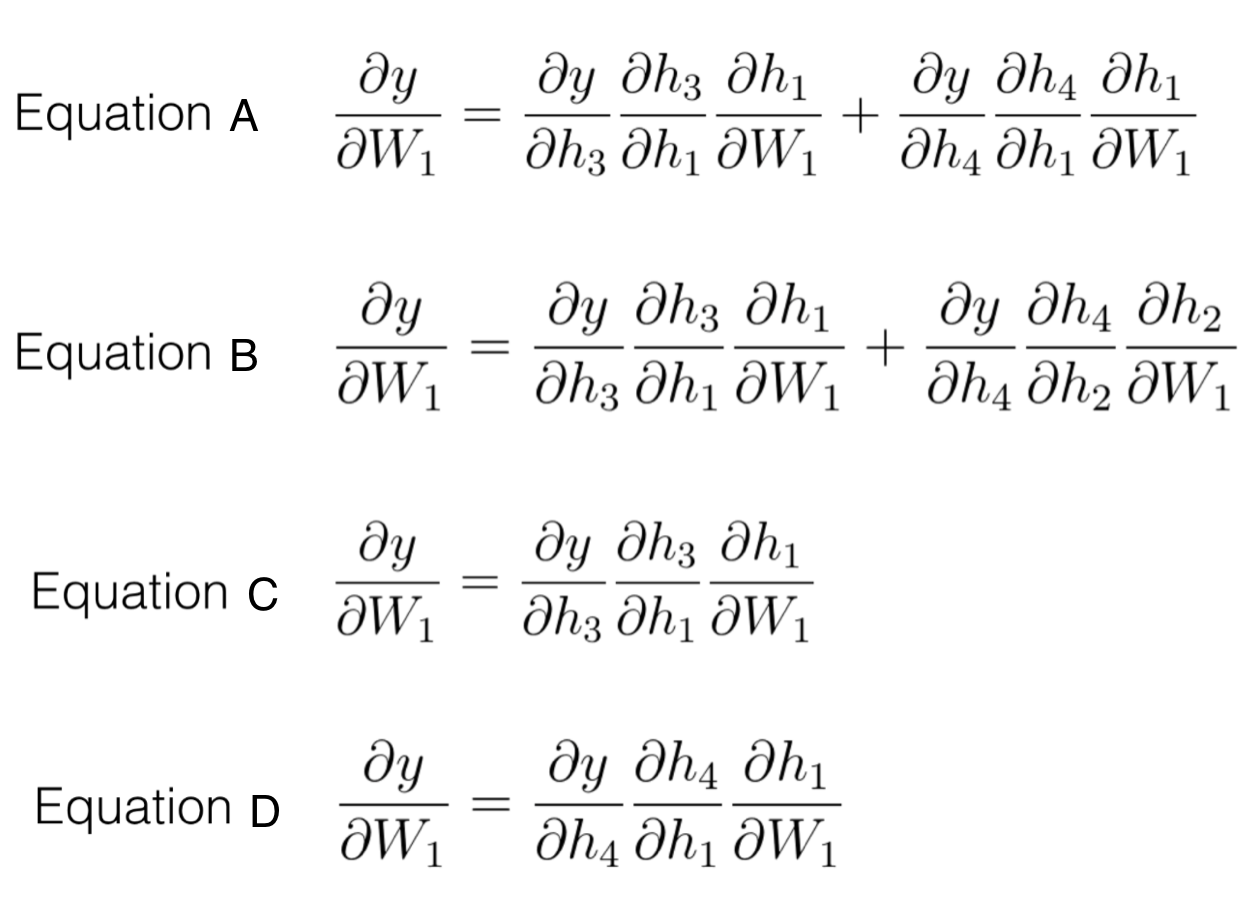

Path A

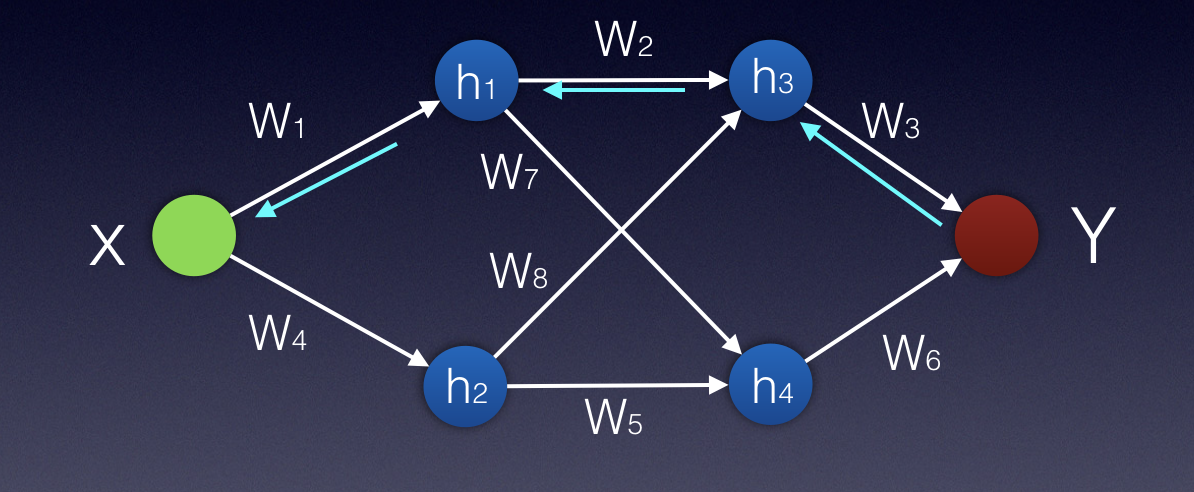

Path B

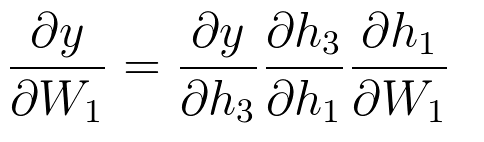

Applying the chain rule along path A gives:

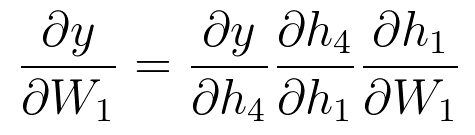

Applying the chain rule along path B gives:

To finish the calculation, we need to account for every path through which W_1 affects the output y. In this case there are two, path A and path B. The derivative of y with respect to W_1 is therefore the sum of the derivatives calculated along each path.
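The path-sum rule can be checked numerically. The sketch below is only an illustration under stated assumptions, since the network's exact labels and activations appear only in the pictures: it assumes sigmoid activations in both hidden layers, a linear output, no biases, and that W_1 (here `w1`) connects x to the first neuron of the first hidden layer. The names `u11`, `u12`, `u21`, `u22`, `v1`, `v2` and all weight values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w):
    """Forward pass; returns every hidden activation and the output y."""
    # First hidden layer (two neurons fed by the single input x).
    h11 = sigmoid(w["w1"] * x)
    h12 = sigmoid(w["w2"] * x)
    # Second hidden layer (each neuron sees both first-layer neurons).
    h21 = sigmoid(w["u11"] * h11 + w["u21"] * h12)
    h22 = sigmoid(w["u12"] * h11 + w["u22"] * h12)
    # Single linear output (an assumption of this sketch).
    y = w["v1"] * h21 + w["v2"] * h22
    return h11, h12, h21, h22, y

def grad_w1_by_paths(x, w):
    """Chain rule along each path from w1 to y, returned separately."""
    h11, _, h21, h22, _ = forward(x, w)
    # Shared first factor: d h11 / d w1 (sigmoid derivative times x).
    dh11_dw1 = h11 * (1.0 - h11) * x
    # Path A: w1 -> h11 -> h21 -> y
    path_a = w["v1"] * h21 * (1.0 - h21) * w["u11"] * dh11_dw1
    # Path B: w1 -> h11 -> h22 -> y
    path_b = w["v2"] * h22 * (1.0 - h22) * w["u12"] * dh11_dw1
    return path_a, path_b

def numeric_grad_w1(x, w, eps=1e-6):
    """Central finite difference of y with respect to w1."""
    up = dict(w, w1=w["w1"] + eps)
    down = dict(w, w1=w["w1"] - eps)
    return (forward(x, up)[-1] - forward(x, down)[-1]) / (2.0 * eps)

# Hypothetical weights and input, chosen only for the demonstration.
weights = {"w1": 0.4, "w2": -0.3, "u11": 0.7, "u12": -0.5,
           "u21": 0.2, "u22": 0.9, "v1": 1.1, "v2": -0.6}
x = 0.5

path_a, path_b = grad_w1_by_paths(x, weights)
total = path_a + path_b
print("path A:", path_a)
print("path B:", path_b)
print("sum of paths:", total)
print("finite difference:", numeric_grad_w1(x, weights))
```

If the two printed totals agree to several decimal places, the sum over paths matches the true derivative. Neither path alone does, because W_1 influences y through both second-layer neurons.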